Autonomous systems are becoming increasingly commonplace across all facets of modern life, including hazardous environments, where the choices made by machines have the potential to cause harm or injury to humans. But how well do we understand the decision-making process of artificial intelligence, and how does this affect trust in autonomous systems? In this blog, Professor Michael Fisher, Dr Louise Dennis and Dr Matt Luckcuck outline the recommendations of their new white paper, calling for greater transparency and easier verification in autonomous decision-making processes, particularly for systems used in situations where there is a risk to human wellbeing.

- A recent report estimates that the total UK market size for autonomous robotic systems will reach almost £3.5 billion by 2030.

- When autonomous systems are used, designers, users, and regulators must consider not only hardware safety concerns, but also the transparency of decisions made by software.

- Transparent decision-making enables users to verify that all identified hazards are reduced as much as is reasonably practicable.

- Regulators have a role in setting and enforcing standards of transparency and verification across all sectors that use autonomous systems, if public trust is to be built and maintained.

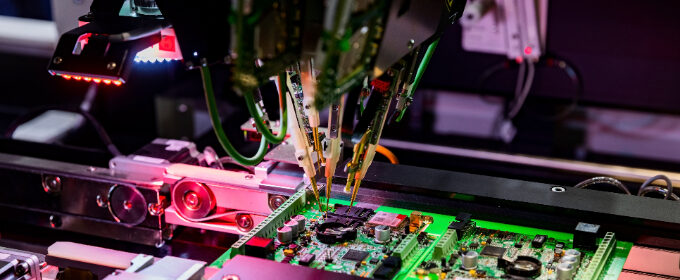

Autonomous systems use software to make decisions without the need for human control. They can be useful for performing tasks that are dirty or dangerous, such as maintaining nuclear reactors, or jobs that are simply dull or distant, like wind turbine repair in the North Sea. In these situations, they are typically embedded in a robotic system to enable physical interaction with the real world, and may operate near humans. This means that autonomous robotic systems are often safety-critical: their failure can cause human harm or death.

Through the RAIN Hub (Robotics and AI in Nuclear), we have been collaborating with partners at the Office for Nuclear Regulation (ONR). Our white paper, Principles for the Development and Assurance of Autonomous Systems for Safe Use in Hazardous Environments, gives guidance on designing and assuring autonomous systems used in hazardous environments, such as those found in the nuclear industry. Principles like these are important for gaining approval from both regulators and the public. The white paper provides principles to be used in addition to existing standards and guidance aimed at, for example, robotics, electronic systems, control systems, and safety-critical software.

We focus on the software providing the system’s autonomy, because this is the new area of concern for these systems, but it is also important to remember the safety implications of the hardware. The white paper makes seven high-level recommendations; here we discuss three broad themes that run through them.

Assess for ethics

It is common to consider safety and security hazards when deploying systems, but where autonomous software is involved it is also necessary to consider ethical hazards. As with many software design decisions, if ethical hazards are not identified early, they can become ‘baked in’, making them extremely difficult to correct. For example, a hidden bias in the data used to train a machine learning system can become an integral part of the system’s decision-making process, producing systematically biased decisions.

Any hazard assessment of an autonomous robotic system should include assessing risks that have an ethical impact, as well as those that have safety and security impacts. The British Standard BS 8611 “Ethical Design and Application of Robots and Robotic Systems” provides a standards-based framework for understanding and accounting for ethical impacts when introducing an autonomous robotic system, and could serve as a useful model to build upon.

Your sector isn’t special

While each sector or environment that an autonomous system might be used in will bring its own specific hazards (such as temperature, radiation, pressure, etc.), there are still common challenges introduced by the use of autonomous software. We assert that these common challenges can be tackled in a sector-agnostic way.

Because an autonomous robotic system can interact with its environment, running physical tests in the early phases of its development can be dangerous, difficult, and time-consuming. This means code analysis and simulation are even more important assurance tools than for non-robotic systems. An autonomous system makes its own independent decisions, so we must ensure that the decisions it makes are those we would have made. We also need to consider how the system should react if something, or someone, in its environment does not behave as it expects.

Design for verification

Autonomous components should be as transparent and verifiable as possible, because they may make decisions without human approval. Transparency enables us to examine the decisions being made, and verification involves a range of techniques to determine the correctness of system behaviour. If an autonomous system is not amenable to examination and verification, then assurance and oversight are challenging. Strong mathematical analysis might not be possible for low-level decisions, such as image classification, where other techniques may need to be used. But for high-level choices about the system’s behaviour, high levels of transparency and the use of strong verification techniques are possible and should be considered essential. Our recent survey paper gives an overview of the state-of-the-art in mathematically-based verification techniques.
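To make the idea of verifying high-level choices concrete, here is a minimal sketch. It is not taken from the white paper: the state variables, the actions, and the safety requirement are all hypothetical, chosen only to illustrate the principle. It assumes the high-level decision logic is written as explicit rules over a small, abstract set of conditions, so that every possible situation can be enumerated and checked against a stated requirement.

```python
from itertools import product
from typing import List, NamedTuple


class State(NamedTuple):
    """Hypothetical abstract view of the robot's situation."""
    human_nearby: bool
    radiation_high: bool
    battery_low: bool


def decide(state: State) -> str:
    """Hypothetical high-level decision rules, written to be readable."""
    if state.human_nearby:
        return "stop"
    if state.battery_low:
        return "return_to_base"
    if state.radiation_high:
        return "retreat"
    return "continue_inspection"


def is_safe(state: State, action: str) -> bool:
    """Hypothetical safety requirement for a (state, action) pair."""
    # The robot must stop whenever a human is nearby.
    if state.human_nearby and action != "stop":
        return False
    # The robot must not carry on inspecting when radiation is high.
    if state.radiation_high and action == "continue_inspection":
        return False
    return True


def verify() -> List[State]:
    """Check every abstract state; return those that break the requirement."""
    violations = []
    for values in product([False, True], repeat=len(State._fields)):
        state = State(*values)
        if not is_safe(state, decide(state)):
            violations.append(state)
    return violations


if __name__ == "__main__":
    # An empty list means no abstract state violates the requirement.
    print(verify())
```

Because the rules are explicit and the abstract state space is small, the check covers every case rather than a sample of test scenarios. Real systems would need far richer models and dedicated verification tools, but the same design choice applies: keeping high-level decisions in a form that can be examined makes this kind of analysis possible at all.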

Part of designing a system with verification in mind involves considering how the system’s requirements are described. The tasks and missions that the system will perform should be clearly defined, to enable accurate verification of the system behaviour. Deploying robotic systems in hazardous environments often requires convincing a regulator (like the ONR) that the system is acceptably safe, so these requirements should be clearly traceable through the design, the development processes, and into the deployed system.

We can use autonomous systems in hazardous environments

When deployed in hazardous environments, autonomous systems bring new challenges to system assurance. But they also bring new opportunities: if designed and introduced properly, they can help workers doing dangerous jobs, inspect areas too hazardous for humans, and make the system’s autonomous decisions open to close analysis. These benefits come at the price of carefully considering how the system will be introduced and used, and of designing the system to be easy to verify.

However, autonomous systems also have the potential to negatively impact the workforce and bystanders in the areas where they are used. These impacts include safety and security concerns, but also other ethical issues such as privacy, equality, and human autonomy. These need to be assessed alongside considerations of safety and security, at the beginning of the development process, and the ethical hazards should be designed out where that is possible. Most sectors considering the deployment of autonomous robotic systems in hazardous environments will also have their own, specific hazards. But many of the challenges created by the introduction of an autonomous system will apply no matter what sector it is used in, so they should be tackled in a cross-sector way.

There is a clear role in this for regulators and policymakers to legislate for, and enforce, minimum standards for transparency and verification, based on the best available techniques from research and industry. Systems must be designed so that their software is as transparent as possible and can easily be verified, to demonstrate that all identified hazards are reduced as much as is reasonably practicable. This is important both for regulatory sign-off and for gaining public trust in the safety of these increasingly prevalent software systems.

Policy@Manchester aims to impact lives globally, nationally and locally through influencing and challenging policymakers with robust research-informed evidence and ideas.