The Grenfell Tower fire saw 80 or more adults and children die in their homes in an entirely preventable disaster. Here, Gill Kernick, who helps high hazard industries prevent major accidents and personally witnessed the disaster, and Martin Stanley (Editor, Understanding Regulation) argue that the fire was a terrible failure of government, leadership and regulation and consider how the Inquiry team might best ensure that the widest possible lessons are learned, and its recommendations implemented.

- The Inquiry should learn from previous investigations and inquiries into Major Accidents in High Hazard industries

- The view that ‘policies and procedures’ keep us safe, and the problem is the person or operator that didn’t follow them, is far too simplistic and will not lead to understanding deeper systemic issues

- To understand the underlying drivers of behaviour it’s important to investigate the reward and measurement structures (both formal and practiced) in place

- Typically, only around half of the recommendations made by an Inquiry will be implemented and often the corrective actions will either not be taken or will not have the impact intended

- The Grenfell Inquiry should set up a process for the successful implementation of its recommendations to ensure lasting change

The day after the fire had been extinguished, Gill was interviewed by Matthew Price on the Today programme, and she has since offered advice to the Inquiry team. She is determined that the Inquiry should learn from previous investigations and inquiries into Major Accidents in High Hazard industries, such as Lord Cullen’s investigation of the Piper Alpha disaster.

Gill emphasises the moral case for including the thinking and learning from previous investigations into major accidents, which have examined the systemic and other failures that resulted in deaths in industries where people had, at least to some degree, knowingly put themselves at risk by the nature of the industry they worked in. In Grenfell, however, adults and children died in their homes. Indications point to similar systemic failures. We must learn the lessons from major accidents and ensure something similar never happens again.

‘The Depressing Sameness’ of Major Accidents

The leadership and management needed to prevent major accidents are different from those required to prevent higher probability, lower consequence events (often referred to as personal safety or ‘slips, trips and falls’). A major accident is a low probability, high consequence event (sometimes referred to as a Black Swan event). Andrew Hopkins, in his book ‘Failure to Learn’ about the Texas City Refinery Disaster (2005, 15 deaths), refers to the ‘depressing sameness’ of major accidents.

On 6th July 1988, almost 29 years to the day before the Grenfell Tragedy, the world’s largest oil rig disaster killed 167 people in the North Sea. There are some striking similarities to the Grenfell Tragedy:

- The fire on Piper Alpha took 22 minutes to engulf the platform. The Public Inquiry into the disaster by Lord Cullen judged that the operator had used inadequate maintenance and safety procedures. Major works had been conducted which removed protection. Cost cutting and pressure to produce were in play.

- Of the 61 survivors, many violated procedure by jumping into the sea. They had been told that doing this would lead to certain death. In Grenfell, residents had been told to stay in their flats. In the face of unprecedented circumstances, regular procedures may not keep us safe.

- Cullen was scathing about the lack of a system or process for coping with a major accident. The same must be said of Grenfell as we look at the absolute failure to take care of victims in the days following the disaster.

A major accident is not the result of a single event. It is a systemic outcome: several latent (pre-existing and often hidden) conditions, usually triggered by an active failure (a current failure such as human error or an ignition source), align at a moment in time and lead to horrific consequences.

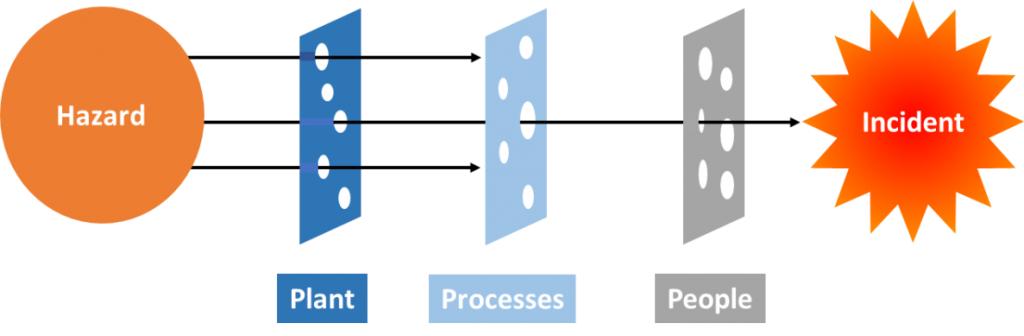

This is illustrated by James Reason’s ‘Barrier’ or ‘Swiss Cheese’ model. There are barriers in place to protect us from major accidents, often categorised into those to do with the asset or plant, policies and procedures, and people. In each of these barriers there are ‘latent conditions’ or holes, which may be known or unknown. In a major accident, these holes line up and a hazard finds its way through all the barriers, with catastrophic results.

It would be valuable to look at the unfolding of the Grenfell tragedy through this lens. Initial indications point to many latent conditions in the plant (one fire exit, exposed gas pipes, window fitting, cladding etc.), in the processes (stay put policy, processes for safety risk assessment etc.) and in people (TMO and Council relationship with residents, failure to listen).

Preventability

The reaction to major accidents is often that they couldn’t have been foreseen; that the nature of ‘low probability’ events somehow means we can’t prevent them. But it is now well understood that one of the most effective ways of avoiding major accidents is to deploy what is often referred to as mindful leadership or chronic unease: that is, actively imagining and fearing the worst thing that could go wrong.

When these horrendous events do happen, there has too often been a shocking failure to learn. For example, in the Texas City disaster, almost every aspect of what went wrong had gone wrong before, either at Texas City or elsewhere.

There may have been a similar learning disability around Grenfell: how is it that little notice appears to have been taken of cladding fires on high rise buildings in France, the UAE and Australia?

Policies and Procedures

Inquiries into previous major incidents have uncovered many instances of policies and procedures that are outdated, inaccurate and contradictory. Holding the view that ‘policies and procedures’ keep us safe, and the problem is the person or operator that didn’t follow them, is far too simplistic and will not lead to understanding deeper systemic issues.

To understand the underlying drivers of behaviour it’s important to investigate the reward and measurement structures (both formal and practiced) in place. In Texas City, incentives were focussed around financial performance with some incentive around personal safety metrics. Attention to process safety or the prevention of major accidents was not encouraged through organisational reward and measurement structures.

It will also be necessary to consider leadership and cultural issues. Indications from residents (both prior to and in the response to the incident) suggest that there may have been a transactional, one-way leadership style that did not welcome or fully understand the views and concerns of residents.

We must also consider the capability of leaders in the Council, K&C TMO and other key stakeholders and contractors. How was their capability developed? What understanding did they have of Major Risk? How were they selected, rewarded etc.?

Looking Forward

It would be a mistake to assume that all parties will be keen to see something better for the future. Whilst the tragedy is seared on the conscience of the nation, there are many parties protecting their own interests and driving their own agendas.

But certain approaches, especially to human error, will improve the chances that lessons will be learned. Sidney Dekker summarises a new perspective that, if taken, will enable the Inquiry to delve into the deeper systemic issues.

| The Old View of human error on what goes wrong | The New View of human error on what goes wrong |
| --- | --- |
| Human error is a cause of trouble | Human error is symptomatic of trouble deeper inside the system |
| To explain failure, you must seek failures (errors, violations, incompetence, mistakes) | To explain failure, do not try to find where people went wrong |
| You must find people’s inaccurate assessments, wrong decisions, bad judgements | Instead, find how people’s assessments and actions made sense at the time, given the circumstances that surrounded them |

| The Old View of human error on how to make it right | The New View of human error on how to make it right |
| --- | --- |
| Complex systems are basically safe | Complex systems are not basically safe |
| Unreliable, erratic humans undermine defenses, rules and regulations | Complex systems are trade-offs between multiple irreconcilable goals (e.g. safety and efficiency) |
| To make systems safer, restrict the human contribution by tighter procedures, automation, supervision | People have to create safety through practice at all levels of an organization |

Unless particular care is taken, even the best recommendations won’t ensure learning. Typically, only around half of the recommendations made by an Inquiry will be implemented. In many cases the corrective actions will either not be taken or will not have the impact intended. One recent example is that the strengthening work recommended as a result of the collapse of Ronan Point in Newham (1968, killing four people) was never carried out at Ledbury Towers, South London.

But the Cullen Report into Piper Alpha did lead to lasting systemic change. All 106 of its recommendations were accepted. Lord Cullen said: “The industry suffered an enormous shock with this inquiry, it was the worst possible, imaginable thing. Each company was looking for itself to see whether this could happen to them, what they could do about it. This all contributed to a will to see that something better for the future could be evolved.”

The Grenfell Inquiry should therefore set up a process for the successful implementation of its recommendations to ensure lasting change. Jim Wetherbee recommends appointing a single person accountable for implementing the recommendations, together with a process for doing so. Failure to consider the implementation of recommendations during the Inquiry could severely limit its impact.

- Gill Kernick will be maintaining a close interest in the Inquiry with a view to ensuring that sensible lessons are learned and recommendations implemented. If you would like to keep in touch with her activities and/or help in any way, please drop her an email at gillkernick@msn.com

- Gill’s detailed advice to the Inquiry team may be read here.

- Her recommendations draw heavily upon the thinking of Jim Wetherbee (author of Controlling Risk in an Unsafe World), Sidney Dekker (author of The Field Guide to Understanding Human Error and Just Culture) and James Reason (author of The Human Contribution and Human Error).