This is the thirty-seventh in a series of posts by Dr Robert Ford, Dr Will Jennings, Dr Mark Pickup and Prof Christopher Wlezien that report on the state of the parties in the UK as measured by opinion polls. By pooling together all the available polling evidence, the impact of the random variation that each individual survey inevitably produces can be reduced.

Most of the short-term advances and setbacks in party polling fortunes are nothing more than random noise; the underlying trends – in which we are interested and which best assess the parties’ standings – are relatively stable and little influenced by day-to-day events. If there can ever be a definitive assessment of the parties’ standings, this is it. Further details of the method we use to build our estimates of public opinion can be found here.
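As a rough illustration of why pooling helps, the sketch below compares the sampling margin of error of a single poll with that of a naive pool of twenty polls. It is not the model behind our estimates: the sample size of 1,000, the 34% share and the simple-random-sampling formula are all assumptions made for illustration, and a naive pool ignores both house effects and movement in opinion over time.

```python
import math

def margin_of_error(share_pct, n, z=1.96):
    """Approximate 95% margin of error, in percentage points,
    for a share (in %) from a simple random sample of size n."""
    p = share_pct / 100
    return 100 * z * math.sqrt(p * (1 - p) / n)

# Hypothetical inputs: one poll of 1,000 respondents versus
# twenty such polls pooled as if they were one large sample.
single = margin_of_error(34, 1000)
pooled = margin_of_error(34, 20 * 1000)

print(f"single poll: +/-{single:.1f} points")
print(f"pooled polls: +/-{pooled:.1f} points")
```

Under these assumptions the margin shrinks by a factor of the square root of the number of polls pooled, which is why month-to-month wobbles in individual polls tell us so little.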

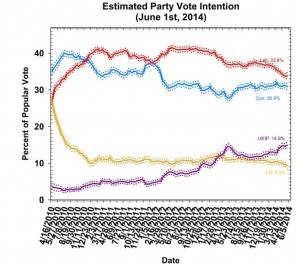

This month’s Polling Observatory comes in the aftermath of the European Parliament elections and the so-called UKIP earthquake for the electoral landscape.

Despite much volatility in the polls ahead of those elections, with a few even putting the Conservatives ahead of Labour for the first time in over two years, underlying support for both main parties remained stable over the course of the month.

Labour may have fallen early in the month in the run-up to the European elections, or the Conservative leads may have been the result of random variation. In any event, by the end of the month, we had Labour polling at 33.8%, just 0.2 points down on their support a month ago. The Conservatives are also broadly flat at 30.9%, 0.3 points below their standing a month ago.

The Lib Dems have suffered slightly more of a post-election hangover, falling 0.4 points to 9.3%, perhaps set back by infighting over the botched coup by Lord Oakeshott and the widespread ridicule over the Clegg/Cable beer-pulling photo op. UKIP support remained stable at record high levels, as they enjoyed a moment in the limelight around the European Parliament elections. We have them rising 0.2 points on last month to 14.9%, their highest support level to date. Note that all these figures are based on our adjusted methodology, which is explained in detail below.

It is noticeable that while Labour’s support has been in decline for the last six to nine months (having plateaued for a period before that) underlying Conservative support has remained incredibly stable around the 31% level. In fact, setting aside the slight slump around the time of the last UKIP surge at the 2013 local elections, their standing with the electorate has been flat since its crash of April 2012 around the time of the ‘omnishambles’ budget.

The narrowing in Labour’s lead over the past year is entirely the result of Labour losing support, not of the Conservatives gaining it. We have written at length previously about how the fate of the Liberal Democrats was sealed in late 2010, and as such it is remarkable that in this parliament there has been so little movement in the polls for the parties in government.

The prevalent anti-politics mood out in the country and continued pessimism about personal/household finances have meant that neither of the Coalition partners has yet been able to convert the economic recovery into a political recovery. Instead, both are gaining ground only in relative terms, as the main opposition party also leaks support, perhaps itself succumbing to the anti-Europe, anti-immigration, anti-Westminster politics of UKIP.

As explained in our methodological mission statement, our method estimates current electoral sentiment by pooling all the currently available polling data, while taking into account the estimated biases of the individual pollsters (“house effects”).

Until now, our method has treated the 2010 election result as a reference point for judging the accuracy of pollsters, and adjusted the poll figures to reflect the estimated biases in the pollsters’ figures based on this reference point. Election results are the best available test of the accuracy of pollsters, so when we started our Polling Observatory estimates, the most recent general election was the obvious choice to “anchor” our statistical model.

However, the political environment has changed dramatically since the Polling Observatory began, and over time we have become steadily more concerned that the changes have rendered our method out of date. Yet changing the method of estimation is also costly, as it interrupts the continuity of our estimates, and makes it harder to compare our current estimate with the figures we reported in our past monthly updates.

There were three concerns about the general election anchoring method. Firstly, it was harsh on the Liberal Democrats, who were over-estimated by pollsters ahead of 2010 but have been scoring very low in the polls ever since they lost over half their general election support after joining the Coalition. The negative public views of the Liberal Democrats, and their very different political position as a party of government, make it less likely that the current polls are over-estimating their underlying support.

Secondly, a general election anchor provides little guidance on UKIP, who scored only 3% in the general election but now poll in the mid-teens, with large disagreements between pollsters over their estimated support (see discussion of house effects below).

Thirdly, the polling ecosystem itself has changed dramatically since 2010, with several new pollsters starting operations, and several other established pollsters making such significant changes to their methodology that they were equivalent to new pollsters as well.

We have decided that these concerns are sufficiently serious to warrant an adjustment to our methodology. Rather than basing our statistical adjustment on the last general election, we now make adjustments relative to the “average pollster”. This assumes that the polling industry as a whole will not be biased. This assumption could prove wrong, of course, as it did in 2010 (and, in a different way, 1992).

However, it seems pretty likely that any systematic bias in 2015 will look very different to 2010, and as we have no way of knowing what the biases in the next election might be, we apply the “average pollster” method as the best interim guide to underlying public opinion.

This change in our methodology has a slight negative impact on our current estimates for both leading parties. Labour would be on 34.5% if anchored against the 2010 election, rather than the new estimate of 33.8%, while the Conservatives would be on 31.5% rather than 30.9%. Yet as both parties fall back by almost the same amount (0.7 and 0.6 points respectively), their relative position is essentially unchanged. UKIP gain slightly from the new methodology: our new estimate is 14.9%, whereas under the old method they would score 14.5%. However, the big gainers are the Lib Dems, who were punished under our old method for their strong polling in advance of the 2010 general election. We now estimate their vote share at 9.3%, significantly above the anaemic 6.7% estimate produced under the previous method.
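The arithmetic behind this comparison can be checked with a few lines of code. The figures are the ones quoted above; the variable names are ours and purely illustrative.

```python
# Estimates quoted in the text, in per cent.
old_anchor = {"Lab": 34.5, "Con": 31.5, "UKIP": 14.5, "Lib Dem": 6.7}
new_anchor = {"Lab": 33.8, "Con": 30.9, "UKIP": 14.9, "Lib Dem": 9.3}

# Change in each party's estimate when moving to the "average pollster" anchor.
shift = {party: round(new_anchor[party] - old_anchor[party], 1)
         for party in old_anchor}

# Labour lead over the Conservatives under each method.
lead_old = round(old_anchor["Lab"] - old_anchor["Con"], 1)
lead_new = round(new_anchor["Lab"] - new_anchor["Con"], 1)

print(shift)
print(lead_old, lead_new)
```

The Labour lead moves by only a tenth of a point between the two methods, while the Lib Dem estimate moves by 2.6 points.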

This is in line with our expectations in earlier discussions of the method in previous posts. It is worth noting that none of these changes affect the overall trends in public opinion that we have been tracking over the last few years, as will be clear from the charts above.

The European Parliament elections prompted the usual inquest into who among the nation’s pollsters had the lowest average error of the final polls compared against the result (see here). We cannot simply extrapolate the accuracy of polling for the European elections to next year’s general election.

For one thing, these sorts of ‘final poll league tables’ are subject to sampling error, making it extremely difficult to separate the accuracy of the polls once this is taken into account (as we have shown here). Nevertheless, with debate likely to continue to rage over the extent of the inroads being made by UKIP as May 2015 approaches, some of the differences observed in the figures reported by the polling companies will come increasingly under the spotlight.

These ‘house effects’ are interesting in themselves because they provide us with prior information about whether an apparent high or low poll rating for a party, reported by a particular pollster, is likely to reflect an actual change in electoral sentiment or is more likely to be down to the particular patterns of support associated with the pollster.

Our new method makes it possible to estimate the ‘house effect’ for each polling company for each party, relative to the vote intention figures we would expect from the average pollster. That is, it tells us simply whether the reported vote intention for a given pollster is above or below the industry average. This does not indicate ‘accuracy’, since there is no election to benchmark the accuracy of the polls against. It could be, in fact, that pollsters at one end of the extreme or the other are giving a more accurate picture of voters’ intentions – but an election is the only real test, and even that is imperfect.
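To make the idea concrete, here is a deliberately simplified sketch of a house effect measured against the average pollster. It is not our statistical model, which estimates trends and house effects jointly over time. The pollster names and readings are hypothetical, and the sketch assumes all polls were taken in a period when underlying opinion was flat.

```python
from statistics import mean

# Hypothetical readings (%) for one party from three made-up pollsters,
# all assumed to be fielded while underlying support was unchanged.
polls = {
    "Pollster A": [12.0, 13.0, 12.5],
    "Pollster B": [15.0, 16.0],
    "Pollster C": [18.0, 17.0, 19.0],
}

def house_effects(polls):
    """Each pollster's mean reading minus the mean across pollsters."""
    pollster_means = {name: mean(vals) for name, vals in polls.items()}
    # Average over pollsters, not over polls, so prolific houses do not dominate.
    industry_average = mean(list(pollster_means.values()))
    return {name: round(m - industry_average, 2)
            for name, m in pollster_means.items()}

effects = house_effects(polls)
print(effects)
```

A positive value means a pollster tends to report the party above the industry average, a negative value below it. As noted above, neither says anything about which pollster is closer to the truth.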

In the table below, we report all current polling companies’ ‘bias’ for each of the parties. We also report details of whether the mode of polling is telephone or Internet-based, and adjustments used to calculate the final headline figures (such as weighting by likelihood to vote or voting behaviour at the 2010 election). From this, it is quickly apparent that the largest range of house effects comes in the estimation of UKIP support, and seems to be associated with the method a pollster employs to field a survey.

All the companies who poll by telephone (except Lord Ashcroft’s new weekly poll) tend to give low scores to UKIP. By contrast, three of the five companies which poll using internet panels give higher than average estimates for UKIP. ComRes provide a particularly interesting example of this “mode effect”, as they conduct polls with overlapping fieldwork periods by telephone and internet panel. The ComRes telephone-based polls give UKIP support levels well below average, while the web polls give support levels well above it.

It is not clear what is driving this methodological difference – something seems to be making people more reluctant to report UKIP support over the telephone, more eager to report it over the internet, or both. The diversity of estimates most likely reflects the inherent difficulty of accurately estimating support for a new party whose overall popularity has risen rapidly, and where the pollsters have little previous information to use to calibrate their estimates.

| House | Mode | Adjustment | Prompt | Con | Lab | Lib Dem | UKIP |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ICM | Telephone | Past vote, likelihood to vote | UKIP prompted if ‘other’ | 1.3 | -0.9 | 2.8 | -2.4 |
| Ipsos-MORI | Telephone | Likelihood (certain) to vote | Unprompted | 0.5 | 0.4 | 0.5 | -1.6 |
| Lord Ashcroft | Telephone | Likelihood to vote, past vote (2010) | UKIP prompted if ‘other’ | -0.7 | -0.8 | -1.2 | 0.9 |
| ComRes (1) | Telephone | Past vote, squeeze, party identification | UKIP prompted if ‘other’ | 0.6 | 0.0 | 0.2 | -2.5 |
| ComRes (2) | Internet | Past vote, squeeze, party identification | UKIP prompted if ‘other’ | 0.3 | -0.7 | -1.0 | 1.8 |
| YouGov | Internet | Newspaper readership, party identification (2010) | UKIP prompted if ‘other’ | 1.9 | 2.1 | -1.3 | -0.2 |
| Opinium | Internet | Likelihood to vote | UKIP prompted if ‘other’ | -0.8 | -0.9 | -2.3 | 3.0 |
| Survation | Internet | Likelihood to vote, past vote (2010) | UKIP prompted | -1.8 | -1.5 | -0.2 | 4.4 |
| Populus | Internet | Likelihood to vote, party identification (2010) | UKIP prompted if ‘other’ | 2.3 | 1.5 | 0.2 | -2.2 |
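For readers who want to explore the mode effect themselves, the short sketch below groups the UKIP column of the table above by polling mode and takes a simple unweighted average. This is a back-of-the-envelope summary rather than part of our model, and with only nine houses the averages should be read with caution.

```python
from statistics import mean

# UKIP house effects copied from the table above (percentage points).
ukip = [
    ("ICM", "Telephone", -2.4),
    ("Ipsos-MORI", "Telephone", -1.6),
    ("Lord Ashcroft", "Telephone", 0.9),
    ("ComRes (1)", "Telephone", -2.5),
    ("ComRes (2)", "Internet", 1.8),
    ("YouGov", "Internet", -0.2),
    ("Opinium", "Internet", 3.0),
    ("Survation", "Internet", 4.4),
    ("Populus", "Internet", -2.2),
]

# Group the effects by mode of fieldwork.
by_mode = {}
for house, mode, effect in ukip:
    by_mode.setdefault(mode, []).append(effect)

# Unweighted mean house effect per mode, and the overall range across houses.
mode_means = {mode: round(mean(vals), 2) for mode, vals in by_mode.items()}
spread = round(max(e for _, _, e in ukip) - min(e for _, _, e in ukip), 1)

print(mode_means)
print(spread)
```

On these figures the telephone houses sit below the industry average for UKIP on balance and the internet houses above it, although YouGov and Populus show that internet polling does not guarantee a high UKIP score.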

[…] Original source – Manchester Policy Blogs […]